From (Walpole et al., 2017):

Concept of a Random Variable

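A statistical experiment is any process that generates several chance observations. In many cases, we need to assign numerical values to the outcomes of such experiments. For example, when testing three electronic components, the sample space is

$$S = \{NNN, NND, NDN, DNN, NDD, DND, DDN, DDD\}$$

where $N$ denotes nondefective and $D$ denotes defective.

We can assign a numerical value to each outcome representing the number of defectives: $0$, $1$, $2$, or $3$.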

Definition:

A random variable is a function that associates a real number with each element in the sample space.
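The definition can be made concrete with a short sketch. It assumes an experiment in which three components are tested and each is either nondefective (`N`) or defective (`D`); the names `sample_space` and `number_of_defectives` are illustrative and not taken from the source.

```python
from itertools import product

# Assumed experiment: test three components, each N (nondefective) or D (defective).
sample_space = ["".join(outcome) for outcome in product("ND", repeat=3)]

def number_of_defectives(outcome: str) -> int:
    """Random variable X: maps one outcome of the sample space to a real number."""
    return outcome.count("D")

# Apply the function to every element of the sample space.
values = {outcome: number_of_defectives(outcome) for outcome in sample_space}
print(values)
print(sorted(set(values.values())))  # the distinct values X can take
```

The random variable here is just an ordinary function whose domain is the sample space, which is exactly what the definition states.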

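We use capital letters (e.g., $X$) to denote a random variable and the corresponding lowercase letter (e.g., $x$) for one of its values.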

Discrete and Continuous Sample Space

Definition:

If a sample space contains a finite number of possibilities or a countable sequence (equivalent to the set of whole numbers), it is called a discrete sample space.

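Some experimental outcomes cannot be counted. For instance, when measuring the distance a car travels on a fixed amount of fuel, distance is measured on a continuous scale, so the possible outcomes are infinite in number and cannot be matched one-to-one with the whole numbers.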

Definition:

If a sample space contains an infinite number of possibilities equivalent to the points on a line segment, it is called a continuous sample space.

A discrete random variable has a countable set of possible outcomes, while a continuous random variable can take values on a continuous scale (an interval of numbers).

Discrete Probability Distributions

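A discrete random variable takes specific values with certain probabilities. For example, when tossing a coin three times, the variable $X$, representing the number of heads, takes the value 2 with probability $3/8$, since 3 of the 8 equally likely outcomes give two heads and one tail.

We can represent the probabilities of a random variable $X$ by a formula, writing $f(x) = P(X = x)$.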

Probability Distribution

Definition:

The set of ordered pairs $(x, f(x))$ is called the probability function, probability mass function, or probability distribution of the discrete random variable $X$ if, for each possible outcome $x$:

1. $f(x) \ge 0$,
2. $\sum_x f(x) = 1$,
3. $P(X = x) = f(x)$.

Example: Probability Distribution

If a car agency sells 50% of its inventory of a certain foreign car equipped with side airbags, find the probability distribution of the number of cars with side airbags among the next 4 cars sold.

Solution:

Since the probability of selling a car with side airbags is 0.5, the $2^4 = 16$ possible outcomes are equally likely. Let $X$ be the number of cars with side airbags sold. The event of selling $x$ cars with side airbags and $4 - x$ without can occur in $\binom{4}{x}$ ways. The probability distribution is:

$$f(x) = \frac{1}{16}\binom{4}{x}, \quad x = 0, 1, 2, 3, 4.$$

Computing the values:

$$f(0) = \frac{1}{16}, \quad f(1) = \frac{1}{4}, \quad f(2) = \frac{3}{8}, \quad f(3) = \frac{1}{4}, \quad f(4) = \frac{1}{16}.$$
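The computation can be checked with a minimal sketch. It assumes the next 4 cars are considered and that each car has side airbags with probability 1/2, so that $f(x) = \binom{4}{x}/16$; the constant `N_CARS` and the function `airbag_pmf` are illustrative names.

```python
from fractions import Fraction
from math import comb

N_CARS = 4  # assumption: number of cars sold in the example

def airbag_pmf(x: int, n: int = N_CARS) -> Fraction:
    """f(x) = C(n, x) / 2**n, valid when each of the 2**n outcomes is equally likely."""
    return Fraction(comb(n, x), 2 ** n)

pmf = {x: airbag_pmf(x) for x in range(N_CARS + 1)}
for x, p in pmf.items():
    print(f"f({x}) = {p}")

# A probability mass function must sum to 1 over all possible values.
total = sum(pmf.values())
print("sum =", total)
```

Exact fractions are used instead of floats so the probabilities can be compared with the hand computation without rounding.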

Cumulative Distribution Function

For many problems, we need to determine the probability that a random variable $X$ is less than or equal to some real number $x$.

Definition:

The cumulative distribution function $F(x)$ of a discrete random variable $X$ with probability distribution $f(x)$ is:

$$F(x) = P(X \le x) = \sum_{t \le x} f(t), \quad -\infty < x < \infty.$$

Example: Cumulative Distribution Function

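Find the cumulative distribution function of the random variable $X$ in the previous example. Using $F(x)$, verify that $f(2) = 3/8$.

Solution:

From the previous calculations, we have $f(0) = 1/16$, $f(1) = 1/4$, $f(2) = 3/8$, $f(3) = 1/4$, and $f(4) = 1/16$. Therefore:

$$F(x) = \begin{cases} 0, & x < 0, \\ \frac{1}{16}, & 0 \le x < 1, \\ \frac{5}{16}, & 1 \le x < 2, \\ \frac{11}{16}, & 2 \le x < 3, \\ \frac{15}{16}, & 3 \le x < 4, \\ 1, & x \ge 4. \end{cases}$$

We can verify that:

$$f(2) = F(2) - F(1) = \frac{11}{16} - \frac{5}{16} = \frac{3}{8}.$$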
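A discrete CDF is a running sum of the probability mass function, which the following sketch illustrates. The probabilities are assumed to be those of the 4-car airbag example, $f(x) = \binom{4}{x}/16$; the names `values`, `probs`, and `cdf` are illustrative.

```python
from fractions import Fraction
from itertools import accumulate

# Assumed pmf: f(x) = C(4, x) / 16 for x = 0, ..., 4.
values = [0, 1, 2, 3, 4]
probs = [Fraction(1, 16), Fraction(4, 16), Fraction(6, 16),
         Fraction(4, 16), Fraction(1, 16)]

# Running totals give F at each possible value of X.
cdf_at_values = dict(zip(values, accumulate(probs)))

def cdf(x) -> Fraction:
    """F(x) = sum of f(t) over all possible values t <= x (a step function)."""
    return sum((p for v, p in zip(values, probs) if v <= x), Fraction(0))

print(cdf_at_values)
print("f(2) recovered from the CDF:", cdf(2) - cdf(1))
```

Because $F$ is a step function, `cdf(2.5)` returns the same value as `cdf(2)`; the jump at each possible value equals the probability of that value.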

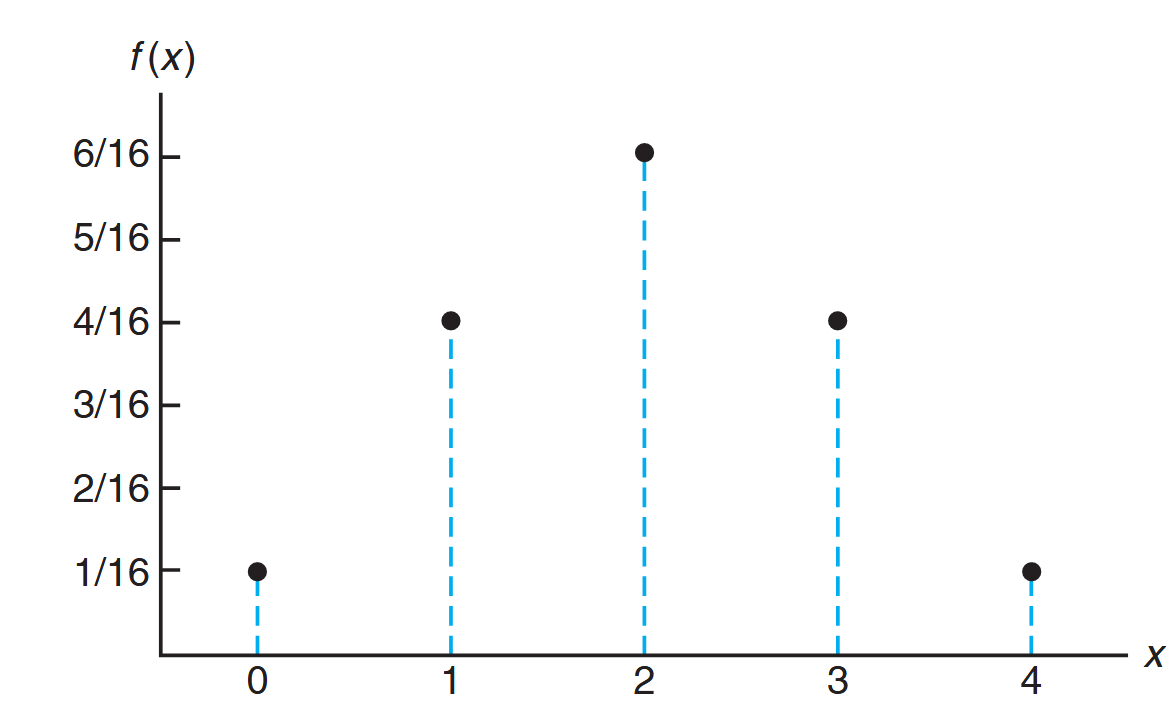

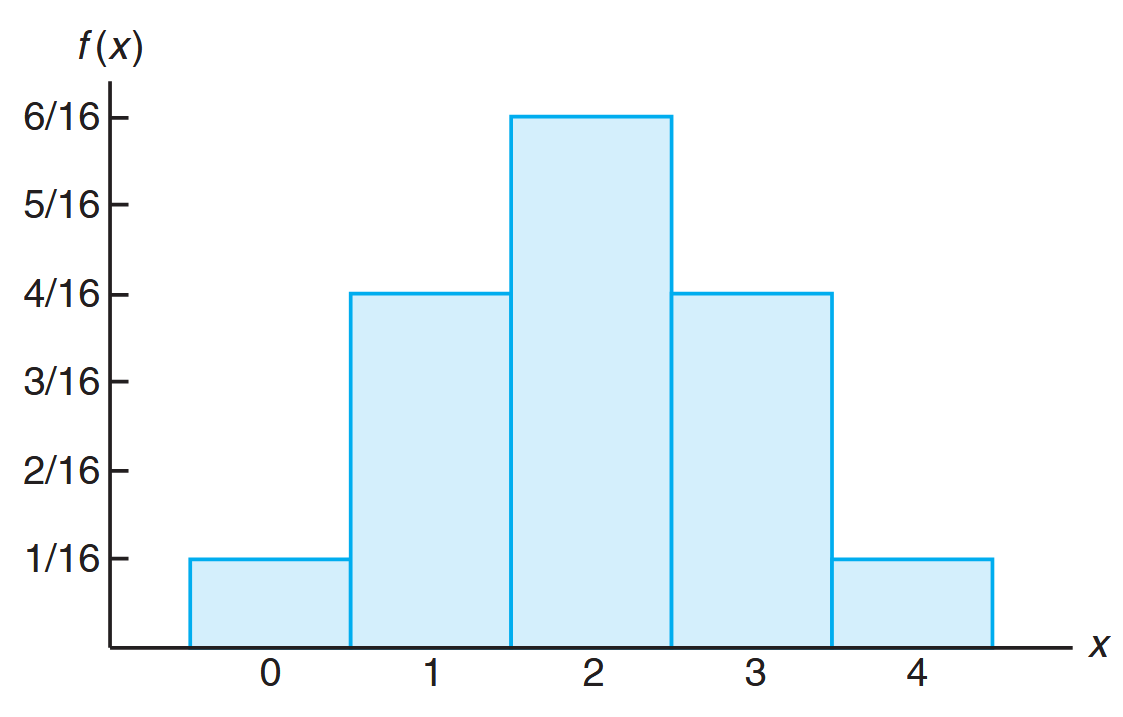

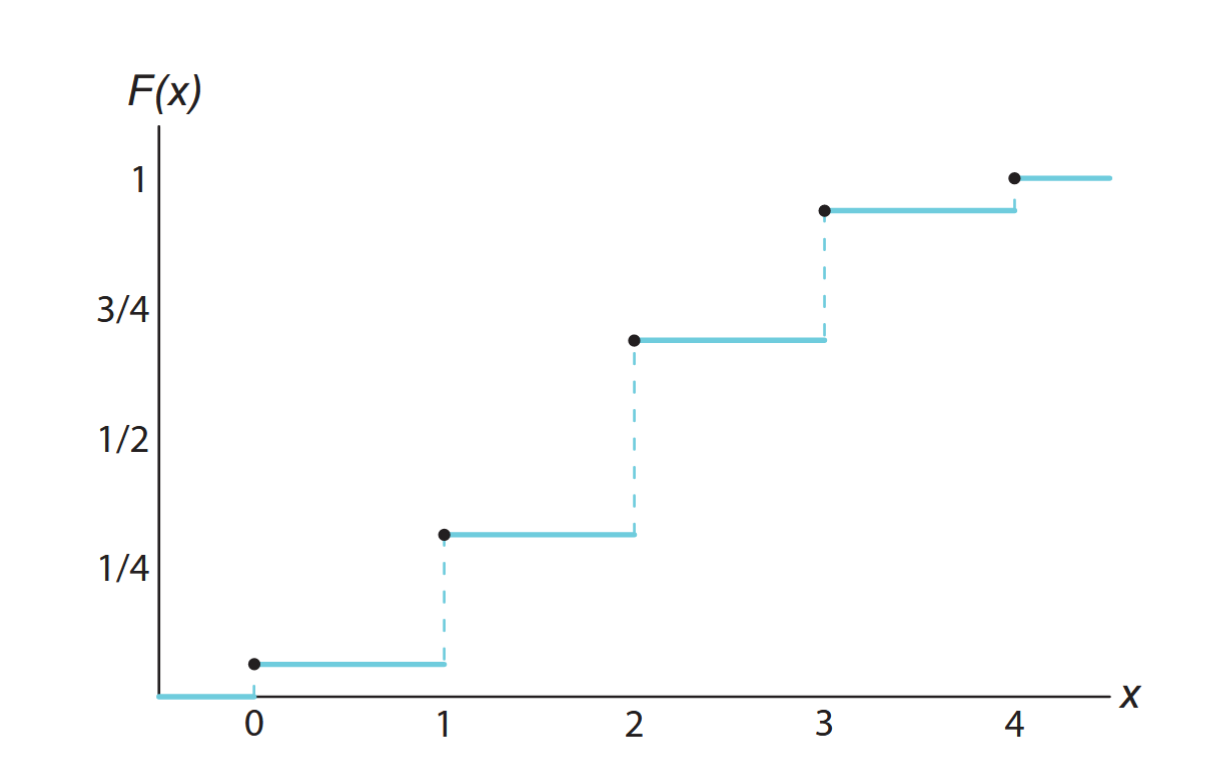

Visualizing probability distributions is often helpful. Here are graphical representations:

Probability mass function plot. (Walpole et al., 2017).

Probability histogram. (Walpole et al., 2017).

Discrete cumulative distribution function. (Walpole et al., 2017).

Continuous Probability Distributions

Unlike discrete random variables, a continuous random variable has a probability of 0 of assuming exactly any one of its values.

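This might seem startling initially, but becomes more plausible when we consider a specific example. Consider a random variable representing the heights of all people over 21 years of age. Between any two values, say 163.5 and 164.5 centimeters, there are infinitely many heights, only one of which is exactly 164 centimeters, so we assign probability 0 to selecting a person of exactly that height.

However, the probability of selecting a person who is at least 163 centimeters but not more than 165 centimeters tall is not 0, because we are now dealing with an interval rather than a single point value.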

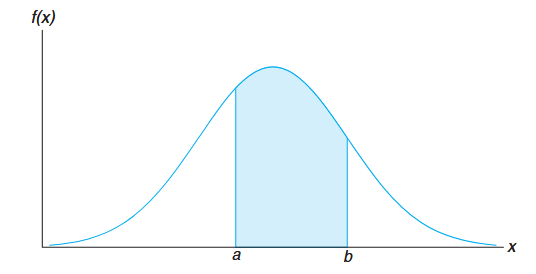

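For continuous random variables, we compute probabilities for intervals such as $P(a < X < b)$ or $P(X \ge c)$. When $X$ is continuous,

$$P(a < X \le b) = P(a < X < b) + P(X = b) = P(a < X < b).$$

That is, it doesn’t matter whether we include an endpoint of the interval or not, since $P(X = b) = 0$. This is not true when $X$ is discrete.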

Probability Density Function

Although the probability distribution of a continuous random variable cannot be presented in tabular form, it can be stated as a formula. Such a formula is a function of the numerical values of the continuous random variable $X$ and is therefore written in functional notation as $f(x)$.

Definition:

For continuous variables, $f(x)$ is called the probability density function, or simply the density function, of $X$.

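Since $X$ is defined over a continuous sample space, $f(x)$ may have a finite number of discontinuities. However, most density functions used in practice are continuous, and their graphs can take several forms: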

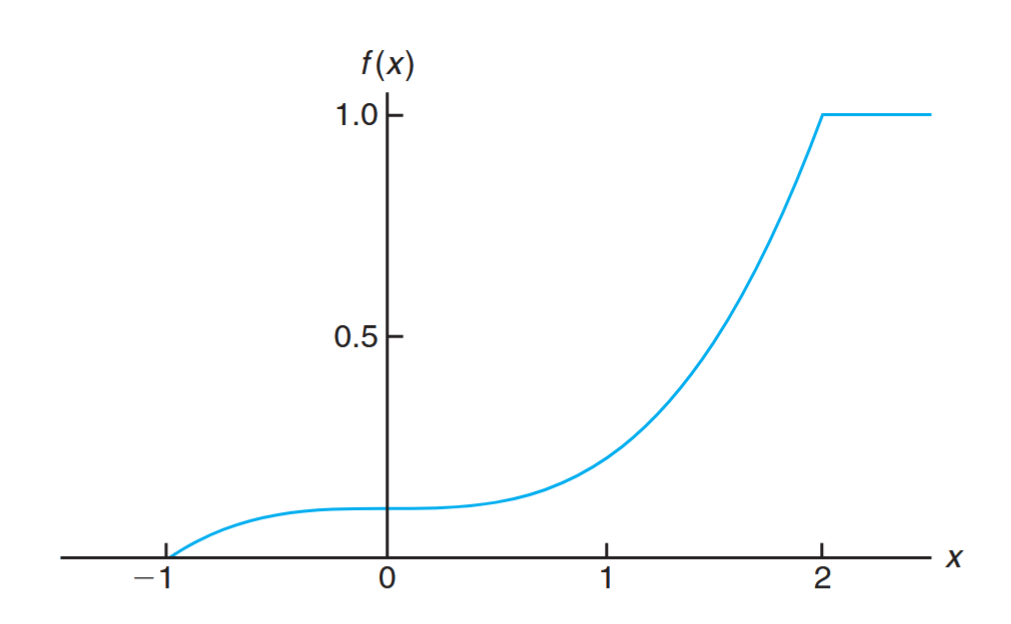

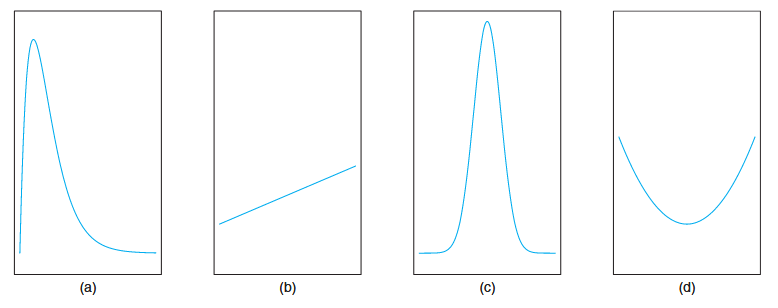

Typical density functions. (Walpole et al., 2017).

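Because areas represent probabilities (which are nonnegative), the density function must never fall below the $x$ axis.

A probability density function is constructed so that the area under its curve bounded by the $x$ axis is equal to 1 when computed over the range of $X$ for which $f(x)$ is defined.

The probability that $X$ assumes a value between $a$ and $b$ is the area under the density function between $x = a$ and $x = b$:

$$P(a < X < b) = \int_a^b f(x)\,dx.$$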

Definition:

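The function $f(x)$ is a probability density function (pdf) for the continuous random variable $X$, defined over the set of real numbers, if:

1. $f(x) \ge 0$, for all $x \in \mathbb{R}$.
2. $\int_{-\infty}^{\infty} f(x)\,dx = 1$.
3. $P(a < X < b) = \int_a^b f(x)\,dx$.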

Example: Temperature Error

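Suppose that the error in the reaction temperature, in °C, for a controlled laboratory experiment is a continuous random variable $X$ having the probability density function:

$$f(x) = \begin{cases} \dfrac{x^2}{3}, & -1 < x < 2, \\ 0, & \text{elsewhere.} \end{cases}$$

- Verify that $f(x)$ is a density function.
- Find $P(0 < X \le 1)$.

Solution:

- Obviously, $f(x) \ge 0$. To verify condition 2, we have:

$$\int_{-\infty}^{\infty} f(x)\,dx = \int_{-1}^{2} \frac{x^2}{3}\,dx = \left.\frac{x^3}{9}\right|_{-1}^{2} = \frac{8}{9} + \frac{1}{9} = 1.$$

- Using formula 3, we obtain:

$$P(0 < X \le 1) = \int_{0}^{1} \frac{x^2}{3}\,dx = \left.\frac{x^3}{9}\right|_{0}^{1} = \frac{1}{9}.$$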
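Both integrals can be checked numerically. The sketch below assumes the density $f(x) = x^2/3$ on $(-1, 2)$ and zero elsewhere, and uses a plain midpoint Riemann sum rather than any particular library routine; `density` and `integrate` are illustrative names.

```python
def density(x: float) -> float:
    """Assumed density: f(x) = x**2 / 3 on (-1, 2), and 0 elsewhere."""
    return x * x / 3 if -1 < x < 2 else 0.0

def integrate(f, a: float, b: float, n: int = 100_000) -> float:
    """Approximate the integral of f over [a, b] with a midpoint Riemann sum."""
    h = (b - a) / n
    return h * sum(f(a + (i + 0.5) * h) for i in range(n))

total_area = integrate(density, -1, 2)  # condition 2: should be close to 1
prob = integrate(density, 0, 1)         # P(0 < X <= 1): should be close to 1/9

print(f"total area  ~ {total_area:.6f}")
print(f"P(0<X<=1)   ~ {prob:.6f}")
```

A numerical check like this does not replace the analytic verification, but it is a quick way to catch an algebra slip in the antiderivative.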

Cumulative Distribution Function

Similarly to discrete random variables, we can define a cumulative distribution function for continuous random variables.

Definition:

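The cumulative distribution function $F(x)$ of a continuous random variable $X$ with density function $f(x)$ is:

$$F(x) = P(X \le x) = \int_{-\infty}^{x} f(t)\,dt, \quad -\infty < x < \infty.$$

As a consequence of this definition, we can write:

$$P(a < X < b) = F(b) - F(a)$$

and if the derivative exists:

$$f(x) = \frac{dF(x)}{dx}.$$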

Example: Using the CDF

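For the density function of the previous example, find $F(x)$, and use it to evaluate $P(0 < X \le 1)$.

Solution:

For $-1 < x < 2$,

$$F(x) = \int_{-\infty}^{x} f(t)\,dt = \int_{-1}^{x} \frac{t^2}{3}\,dt = \left.\frac{t^3}{9}\right|_{-1}^{x} = \frac{x^3 + 1}{9}.$$

Therefore:

$$F(x) = \begin{cases} 0, & x < -1, \\ \dfrac{x^3 + 1}{9}, & -1 \le x < 2, \\ 1, & x \ge 2. \end{cases}$$

Now we can find:

$$P(0 < X \le 1) = F(1) - F(0) = \frac{2}{9} - \frac{1}{9} = \frac{1}{9}.$$

This agrees with our result using the density function directly.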
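The piecewise CDF translates directly into a small function. This sketch assumes the temperature-error density $f(x) = x^2/3$ on $(-1, 2)$, so that $F(x) = (x^3 + 1)/9$ on that interval; `temperature_error_cdf` is an illustrative name.

```python
from fractions import Fraction

def temperature_error_cdf(x) -> Fraction:
    """F(x) for the assumed density x**2/3 on (-1, 2): 0 below -1, 1 from 2 onward."""
    if x < -1:
        return Fraction(0)
    if x < 2:
        return (Fraction(x) ** 3 + 1) / 9
    return Fraction(1)

# P(0 < X <= 1) = F(1) - F(0)
prob = temperature_error_cdf(1) - temperature_error_cdf(0)
print("F(1) =", temperature_error_cdf(1))
print("F(0) =", temperature_error_cdf(0))
print("P(0 < X <= 1) =", prob)
```

Once $F$ is available, any interval probability is a subtraction, with no further integration needed.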

Example: Bidding Process

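The Department of Energy (DOE) puts projects out on bid and generally estimates what a reasonable bid should be. Call the estimate $b$. The DOE has determined that the density function of the winning (low) bid is:

$$f(y) = \begin{cases} \dfrac{5}{8b}, & \dfrac{2}{5}b \le y \le 2b, \\ 0, & \text{elsewhere.} \end{cases}$$

Find $F(y)$ and use it to determine the probability that the winning bid is less than the DOE’s preliminary estimate $b$.

Solution:

For $\frac{2}{5}b \le y \le 2b$,

$$F(y) = \int_{2b/5}^{y} \frac{5}{8b}\,dt = \left.\frac{5t}{8b}\right|_{2b/5}^{y} = \frac{5y}{8b} - \frac{1}{4}.$$

Thus:

$$F(y) = \begin{cases} 0, & y < \frac{2}{5}b, \\ \dfrac{5y}{8b} - \dfrac{1}{4}, & \frac{2}{5}b \le y < 2b, \\ 1, & y \ge 2b. \end{cases}$$

To find the probability that the winning bid is less than the preliminary bid estimate $b$:

$$P(Y \le b) = F(b) = \frac{5}{8} - \frac{1}{4} = \frac{3}{8}.$$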
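The result does not depend on the size of the estimate, which a short sketch makes visible. It assumes the winning bid is uniform with density $5/(8b)$ on $[2b/5, 2b]$; the function name `winning_bid_cdf` and the sample estimates are hypothetical.

```python
from fractions import Fraction

def winning_bid_cdf(y, b) -> Fraction:
    """F(y) for the assumed density 5/(8b) on [2b/5, 2b], where b is the estimate."""
    y, b = Fraction(y), Fraction(b)
    if y < 2 * b / 5:
        return Fraction(0)
    if y < 2 * b:
        return 5 * y / (8 * b) - Fraction(1, 4)
    return Fraction(1)

# Hypothetical estimates: P(Y <= b) is the same for each of them.
for estimate in (100, 2_500, 1_000_000):
    print(estimate, winning_bid_cdf(estimate, estimate))
```

The probability is the same for every estimate because the density scales with $b$.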

Mean of a Random Variable

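The mean of a random variable represents the “center” or expected value of its probability distribution. Consider this example: If two coins are tossed 16 times and $X$ is the number of heads per toss, then $X$ can take the values 0, 1, and 2. Suppose the experiment yields no heads, one head, and two heads a total of 4, 7, and 5 times, respectively. The average number of heads per toss is then

$$\frac{(0)(4) + (1)(7) + (2)(5)}{16} \approx 1.06.$$

This average value (1.06) is computed from observed data and need not be a possible value of $X$.

When the observed relative frequencies are replaced by the probabilities of the values of $X$, this average value is called the mean of the random variable $X$ (or the mean of the probability distribution of $X$), written $\mu_X$ or simply $\mu$.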

Definition:

Let $X$ be a random variable with probability distribution $f(x)$. The mean, or expected value, of $X$ is:

For a discrete random variable:

$$\mu = E(X) = \sum_x x f(x)$$

For a continuous random variable:

$$\mu = E(X) = \int_{-\infty}^{\infty} x f(x)\,dx$$
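For the discrete case, the definition is a probability-weighted sum. The sketch below assumes two fair coins, so the number of heads has probabilities 1/4, 1/2, and 1/4; `expected_value` and `two_coin_heads` are illustrative names.

```python
from fractions import Fraction

def expected_value(pmf: dict) -> Fraction:
    """mu = sum of x * f(x) over all possible values x of a discrete random variable."""
    return sum(x * p for x, p in pmf.items())

# Assumed pmf: number of heads when two fair coins are tossed.
two_coin_heads = {0: Fraction(1, 4), 1: Fraction(1, 2), 2: Fraction(1, 4)}

mean_heads = expected_value(two_coin_heads)
print("E(X) =", mean_heads)
```

The same function works for any finite pmf given as a mapping from values to probabilities.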

Variance and Covariance of Random Variables

Variance of Random Variables

While the mean describes the center of a probability distribution, it doesn’t tell us about how spread out the values are. The variance measures this dispersion or variability.

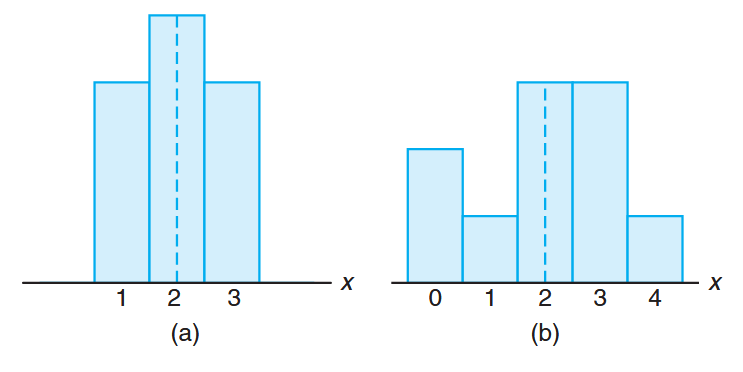

Two distributions can have the same mean but very different spreads around that mean:

Distributions with equal means and unequal dispersions. (Walpole et al., 2017).

Definition:

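Let $X$ be a random variable with probability distribution $f(x)$ and mean $\mu$. The variance of $X$ is:

For a discrete random variable:

$$\sigma^2 = E[(X - \mu)^2] = \sum_x (x - \mu)^2 f(x)$$

For a continuous random variable:

$$\sigma^2 = E[(X - \mu)^2] = \int_{-\infty}^{\infty} (x - \mu)^2 f(x)\,dx$$

The positive square root of the variance, $\sigma$, is called the standard deviation of $X$.

The term $x - \mu$ is called the deviation of an observation from its mean.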

Example: Comparing Variances

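Let the random variable $X$ represent the number of automobiles used for official business purposes on any given workday. The probability distributions for two companies are:

Company A:

$$\begin{array}{c|ccc} x & 1 & 2 & 3 \\ \hline f(x) & 0.3 & 0.4 & 0.3 \end{array}$$

Company B:

$$\begin{array}{c|ccccc} x & 0 & 1 & 2 & 3 & 4 \\ \hline f(x) & 0.2 & 0.1 & 0.3 & 0.3 & 0.1 \end{array}$$

Compare the variances of both distributions.

Solution:

For company A:

$$\mu_A = (1)(0.3) + (2)(0.4) + (3)(0.3) = 2.0$$

Then:

$$\sigma_A^2 = (1 - 2)^2(0.3) + (2 - 2)^2(0.4) + (3 - 2)^2(0.3) = 0.6$$

For company B:

$$\mu_B = (0)(0.2) + (1)(0.1) + (2)(0.3) + (3)(0.3) + (4)(0.1) = 2.0$$

Then:

$$\sigma_B^2 = (0 - 2)^2(0.2) + (1 - 2)^2(0.1) + (2 - 2)^2(0.3) + (3 - 2)^2(0.3) + (4 - 2)^2(0.1) = 1.6$$

Although both distributions have the same mean ($\mu = 2.0$), company B has a significantly larger variance ($\sigma_B^2 = 1.6$ versus $\sigma_A^2 = 0.6$), indicating greater variability in the number of automobiles used daily.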
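The comparison can be reproduced with a few lines of code. The two tables below are assumptions restating the Company A and Company B distributions of the textbook example; `mean` and `variance` are illustrative helper names.

```python
from fractions import Fraction

def mean(pmf: dict) -> Fraction:
    """mu = sum of x * f(x)."""
    return sum(x * p for x, p in pmf.items())

def variance(pmf: dict) -> Fraction:
    """sigma^2 = sum of (x - mu)^2 * f(x), straight from the definition."""
    mu = mean(pmf)
    return sum((x - mu) ** 2 * p for x, p in pmf.items())

# Assumed distributions for the number of automobiles in use per workday.
company_a = {1: Fraction("0.3"), 2: Fraction("0.4"), 3: Fraction("0.3")}
company_b = {0: Fraction("0.2"), 1: Fraction("0.1"), 2: Fraction("0.3"),
             3: Fraction("0.3"), 4: Fraction("0.1")}

for name, pmf in (("A", company_a), ("B", company_b)):
    print(f"Company {name}: mean = {float(mean(pmf))}, variance = {float(variance(pmf))}")
```

`Fraction("0.3")` parses the decimal exactly, which avoids the small floating-point errors that would otherwise appear in the squared deviations.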

An alternative formula for calculating variance that often simplifies computations is:

Theorem:

The variance of a random variable $X$ is:

$$\sigma^2 = E(X^2) - \mu^2$$

Example: Using the Alternative Formula

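Let $X$ represent the number of defective parts when 3 parts are sampled from a production line. The probability distribution is:

$$\begin{array}{c|cccc} x & 0 & 1 & 2 & 3 \\ \hline f(x) & 0.51 & 0.38 & 0.10 & 0.01 \end{array}$$

Calculate the variance using the alternative formula.

Solution:

First, we compute the mean:

$$\mu = (0)(0.51) + (1)(0.38) + (2)(0.10) + (3)(0.01) = 0.61$$

Next, we find $E(X^2)$:

$$E(X^2) = (0)(0.51) + (1)(0.38) + (4)(0.10) + (9)(0.01) = 0.87$$

Therefore:

$$\sigma^2 = E(X^2) - \mu^2 = 0.87 - (0.61)^2 = 0.4979$$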
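The shortcut formula and the definition must agree, which is easy to confirm in code. The table below is an assumption restating the defective-parts distribution of the textbook example (3 parts sampled); the variable names are illustrative.

```python
from fractions import Fraction

# Assumed pmf for the number of defective parts among 3 sampled parts.
defectives = {0: Fraction("0.51"), 1: Fraction("0.38"),
              2: Fraction("0.10"), 3: Fraction("0.01")}

mu = sum(x * p for x, p in defectives.items())        # E(X)
e_x2 = sum(x * x * p for x, p in defectives.items())  # E(X^2)

var_shortcut = e_x2 - mu ** 2                                         # E(X^2) - mu^2
var_definition = sum((x - mu) ** 2 * p for x, p in defectives.items())  # E[(X - mu)^2]

print("E(X)   =", float(mu))
print("E(X^2) =", float(e_x2))
print("variance (shortcut)   =", float(var_shortcut))
print("variance (definition) =", float(var_definition))
```

The shortcut needs only two passes of simple sums, which is why it is usually preferred for hand calculation.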

Covariance of Random Variables

The covariance measures the relationship between two random variables, indicating how they vary together.

Definition:

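Let $X$ and $Y$ be random variables with joint probability distribution $f(x, y)$ and means $\mu_X$ and $\mu_Y$. The covariance of $X$ and $Y$ is:

For discrete random variables:

$$\sigma_{XY} = E[(X - \mu_X)(Y - \mu_Y)] = \sum_x \sum_y (x - \mu_X)(y - \mu_Y) f(x, y)$$

For continuous random variables:

$$\sigma_{XY} = E[(X - \mu_X)(Y - \mu_Y)] = \int_{-\infty}^{\infty} \int_{-\infty}^{\infty} (x - \mu_X)(y - \mu_Y) f(x, y)\,dx\,dy$$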

A positive covariance indicates that the variables tend to move in the same direction, while a negative covariance suggests they move in opposite directions. Zero covariance indicates no linear relationship between the variables.
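A small discrete example shows how the sign of the covariance arises. The joint table below is made up purely for illustration (it is not from the source): two 0/1 variables that agree 80% of the time. The function name `covariance` is likewise illustrative.

```python
from fractions import Fraction

# Hypothetical joint pmf f(x, y): X and Y take the same value with probability 0.8.
joint = {
    (0, 0): Fraction(2, 5), (0, 1): Fraction(1, 10),
    (1, 0): Fraction(1, 10), (1, 1): Fraction(2, 5),
}

def covariance(joint_pmf: dict) -> Fraction:
    """sigma_XY = sum over (x, y) of (x - mu_X)(y - mu_Y) f(x, y)."""
    mu_x = sum(x * p for (x, _), p in joint_pmf.items())
    mu_y = sum(y * p for (_, y), p in joint_pmf.items())
    return sum((x - mu_x) * (y - mu_y) * p for (x, y), p in joint_pmf.items())

cov_xy = covariance(joint)
print("covariance =", cov_xy)

# Swapping the labels of Y makes the variables tend to disagree instead.
flipped = {(x, 1 - y): p for (x, y), p in joint.items()}
print("covariance after flipping Y =", covariance(flipped))
```

The first covariance is positive because the variables tend to move together; relabeling $Y$ reverses the association and flips the sign.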

Linear Combinations of Random Variables

The following properties simplify calculations for means and variances of linear combinations of random variables.

Theorem:

If $a$ and $b$ are constants, then:

$$E(aX + b) = aE(X) + b$$

Special cases:

- Setting $a = 0$, $E(b) = b$.
- Setting $b = 0$, $E(aX) = aE(X)$.

Theorem:

The expected value of the sum or difference of two or more functions of a random variable $X$ is the sum or difference of the expected values of the functions. That is,

$$E[g(X) \pm h(X)] = E[g(X)] \pm E[h(X)]$$

Example:

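Let $X$ be a random variable with probability distribution as follows:

$$\begin{array}{c|cccc} x & 0 & 1 & 2 & 3 \\ \hline f(x) & \frac{1}{3} & \frac{1}{2} & 0 & \frac{1}{6} \end{array}$$

Applying the theorem above to the function $Y = (X - 1)^2$, we can write

$$E[(X - 1)^2] = E(X^2 - 2X + 1) = E(X^2) - 2E(X) + E(1).$$

We know that $E(1) = 1$, and by direct computation,

$$E(X) = (0)\left(\tfrac{1}{3}\right) + (1)\left(\tfrac{1}{2}\right) + (2)(0) + (3)\left(\tfrac{1}{6}\right) = 1, \qquad E(X^2) = (0)\left(\tfrac{1}{3}\right) + (1)\left(\tfrac{1}{2}\right) + (4)(0) + (9)\left(\tfrac{1}{6}\right) = 2.$$

Hence,

$$E[(X - 1)^2] = 2 - (2)(1) + 1 = 1.$$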
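The theorem can be verified by computing the expectation in two ways. The sketch assumes the distribution $f(0) = 1/3$, $f(1) = 1/2$, $f(2) = 0$, $f(3) = 1/6$ and the function $g(X) = (X - 1)^2$; the helper name `expect` is illustrative.

```python
from fractions import Fraction

# Assumed pmf of X.
pmf = {0: Fraction(1, 3), 1: Fraction(1, 2), 2: Fraction(0), 3: Fraction(1, 6)}

def expect(g, pmf: dict) -> Fraction:
    """E[g(X)] = sum of g(x) * f(x) over all possible values x."""
    return sum(g(x) * p for x, p in pmf.items())

# Direct computation of E[(X - 1)^2].
direct = expect(lambda x: (x - 1) ** 2, pmf)

# Term-by-term computation: E(X^2) - 2 E(X) + E(1).
by_linearity = expect(lambda x: x ** 2, pmf) - 2 * expect(lambda x: x, pmf) + expect(lambda x: 1, pmf)

print("direct       =", direct)
print("by linearity =", by_linearity)
```

Both routes give the same value, which is the content of the theorem for this particular choice of functions.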

Theorem:

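If $X$ and $Y$ are random variables with joint probability distribution $f(x, y)$ and $a$, $b$, and $c$ are constants, then

$$\sigma^2_{aX + bY + c} = a^2 \sigma_X^2 + b^2 \sigma_Y^2 + 2ab\,\sigma_{XY}$$

Special cases:

- Setting $b = 0$: $\sigma^2_{aX + c} = a^2 \sigma_X^2$
- Setting $a = 1$ and $b = 0$: $\sigma^2_{X + c} = \sigma_X^2$
- Setting $b = 0$ and $c = 0$, we see that $\sigma^2_{aX} = a^2 \sigma_X^2$
- If $X$ and $Y$ are independent random variables, then $\sigma^2_{aX + bY} = a^2 \sigma_X^2 + b^2 \sigma_Y^2$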

Example:

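If $X$ and $Y$ are random variables with variances $\sigma_X^2 = 2$ and $\sigma_Y^2 = 4$ and covariance $\sigma_{XY} = -2$, find the variance of the random variable $Z = 3X - 4Y + 8$.

Solution:

$$\sigma_Z^2 = \sigma^2_{3X - 4Y + 8} = 9\sigma_X^2 + 16\sigma_Y^2 - 24\sigma_{XY} = (9)(2) + (16)(4) - (24)(-2) = 130.$$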
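The theorem reduces this kind of problem to arithmetic, as the sketch below shows. It assumes $\sigma_X^2 = 2$, $\sigma_Y^2 = 4$, $\sigma_{XY} = -2$, and $Z = 3X - 4Y + 8$; the function name `variance_linear_combination` is illustrative.

```python
def variance_linear_combination(a, b, var_x, var_y, cov_xy):
    """Variance of aX + bY + c: a^2 var_x + b^2 var_y + 2ab cov_xy (c has no effect)."""
    return a ** 2 * var_x + b ** 2 * var_y + 2 * a * b * cov_xy

# Assumed inputs: Z = 3X - 4Y + 8 with var(X) = 2, var(Y) = 4, cov(X, Y) = -2.
var_z = variance_linear_combination(a=3, b=-4, var_x=2, var_y=4, cov_xy=-2)
print("Var(Z) =", var_z)

# With zero covariance (for example, independent X and Y) the cross term vanishes.
var_z_uncorrelated = variance_linear_combination(a=3, b=-4, var_x=2, var_y=4, cov_xy=0)
print("Var(Z) with zero covariance =", var_z_uncorrelated)
```

The additive constant is deliberately not a parameter, since shifting a random variable does not change its spread.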

Exercises

Exercise 1

A farmer found a bottle with

Part a

Find the probability function of

Solution:

We need to find

For

For

- First genie must not grant wishes:

- Second genie must grant wishes:

For

- First two genies must not grant wishes:

- Third genie must grant wishes:

For

- First three genies must not grant wishes:

- Fourth genie must grant wishes:

The complete probability distribution is:

Part b

Calculate

Solution:

First, we calculate the expected value:

To find the standard deviation, we first calculate

Now we can calculate the variance:

And the standard deviation:

The expected number of genies that need to be released until finding a wish-granting genie is