From (Walpole et al., 2017):

Binomial and Multinomial Distributions

Many statistical experiments follow similar patterns, allowing us to describe their behavior with standardized probability distributions. One of the most common and useful distributions is the binomial distribution.

For example, when testing the effectiveness of a new drug, the number of cured patients among all treated patients approximately follows a binomial distribution.

The Bernoulli Process

A Bernoulli process consists of repeated trials where each trial has exactly two possible outcomes, commonly labeled “success” and “failure”. Examples include testing electronic components (defective vs. non-defective) or flipping coins (heads vs. tails).

Formally, a Bernoulli process must satisfy these properties:

- The experiment consists of repeated trials

- Each trial results in exactly two possible outcomes: “success” or “failure”

- The probability of success, denoted by $p$, remains constant from trial to trial

- All trials are independent of each other

Consider selecting

If the process produces

Similar calculations for all outcomes yield the probability distribution:

Binomial Distribution

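The number $X$ of successes in $n$ Bernoulli trials is called a binomial random variable. Its probability distribution is called the binomial distribution, and its values are denoted by $b(x; n, p)$.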

Theorem:

In a Bernoulli process with success probability $p$ and failure probability $q = 1 - p$, the probability distribution of the binomial random variable $X$ (the number of successes in $n$ independent trials) is:

$$b(x; n, p) = \binom{n}{x} p^x q^{n-x}, \quad x = 0, 1, 2, \ldots, n$$

The formula can be derived as follows:

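- The probability of $x$ successes and $n - x$ failures in one specific order is $p^x q^{n-x}$, where $q = 1 - p$

- The number of different ways to arrange $x$ successes among $n$ trials is $\binom{n}{x}$

- Multiplying these gives the total probability of exactly $x$ successes in $n$ trials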
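The counting argument above translates directly into code. The following is a minimal Python sketch, assuming the usual notation $b(x; n, p)$ for the binomial probability; the function name `binomial_pmf` is illustrative and not part of the source.

```python
from math import comb


def binomial_pmf(x: int, n: int, p: float) -> float:
    """Probability of exactly x successes in n independent Bernoulli trials."""
    if not 0 <= x <= n:
        return 0.0
    # comb(n, x) counts the orderings; p**x * (1 - p)**(n - x) is the
    # probability of any single ordering with x successes.
    return comb(n, x) * p**x * (1 - p) ** (n - x)


# The probabilities over all possible counts should sum to 1.
total = sum(binomial_pmf(x, 10, 0.3) for x in range(11))
print(binomial_pmf(3, 10, 0.3), total)
```

Using `math.comb` keeps the binomial coefficient exact as an integer before it is multiplied by the floating-point powers.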

Example: Binomial Calculation

The probability that a certain component will survive a shock test is

. Find the probability that exactly of the next components tested survive. Solution:

Withtrials, success probability (survival), and successes, we have:

Mean and Variance of Binomial Distribution

The binomial distribution’s parameters directly determine its mean and variance.

Theorem:

The mean and variance of the binomial distribution $b(x; n, p)$ are:

$$\mu = np \quad \text{and} \quad \sigma^2 = npq$$

where $q = 1 - p$.

This makes intuitive sense:

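- The mean $np$ is the number of trials multiplied by the probability of success on each trial

- The variance $npq$ is largest when $p = 0.5$ and shrinks toward $0$ as $p$ approaches $0$ or $1$, where the outcome of each trial becomes nearly certain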
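The closed forms $\mu = np$ and $\sigma^2 = npq$ can be checked numerically by summing over the probability mass function. This is a small sketch under that assumption; `binomial_moments` is a hypothetical helper name, and the values $n = 10$, $p = 0.3$ are chosen only for illustration.

```python
from math import comb


def binomial_moments(n: int, p: float) -> tuple[float, float]:
    """Return (mean, variance) of a binomial variable by direct summation."""
    pmf = [comb(n, x) * p**x * (1 - p) ** (n - x) for x in range(n + 1)]
    mean = sum(x * f for x, f in enumerate(pmf))
    variance = sum((x - mean) ** 2 * f for x, f in enumerate(pmf))
    return mean, variance


mean, variance = binomial_moments(10, 0.3)
# Compare with the closed forms n*p and n*p*(1 - p).
print(mean, 10 * 0.3)
print(variance, 10 * 0.3 * 0.7)
```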

Example: Impurity Testing

It is conjectured that impurities exist in $30\%$ of drinking wells in a rural community. To investigate, $10$ wells are randomly selected for testing.

- What is the probability that exactly $3$ wells have impurities?

- What is the probability that more than $3$ wells are impure?

Solution:

With $n = 10$, $p = 0.3$, and $q = 0.7$:

- For exactly $3$ impure wells: $$b(3; 10, 0.3) = \binom{10}{3}(0.3)^3(0.7)^7 = 120 \cdot 0.027 \cdot 0.0824 = 0.2668$$

Hypergeometric Distribution

The binomial distribution assumes that each trial is independent with a constant probability of success. However, in sampling without replacement, this assumption doesn’t hold, as the probability of success changes after each selection. For these scenarios, we use the hypergeometric distribution.

Theorem: Hypergeometric Distribution

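The probability distribution of the hypergeometric random variable $X$, representing the number of successes in a random sample of size $n$ selected from $N$ items (of which $k$ are labeled “success” and $N - k$ are labeled “failure”), is:

$$h(x; N, n, k) = \frac{\binom{k}{x}\binom{N-k}{n-x}}{\binom{N}{n}}, \quad \max\{0,\, n - (N - k)\} \le x \le \min\{n,\, k\}$$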

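The hypergeometric formula can be understood as the number of ways to choose $x$ successes from the $k$ available, multiplied by the number of ways to choose the remaining $n - x$ items from the $N - k$ failures, divided by the total number of ways to choose $n$ items from $N$.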
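The counting interpretation of the hypergeometric probability can be sketched in a few lines of Python. The function name `hypergeom_pmf` and the numbers used below ($N = 10$ items, $k = 4$ successes, sample size $n = 3$) are assumptions for illustration and do not come from the source.

```python
from math import comb


def hypergeom_pmf(x: int, N: int, n: int, k: int) -> float:
    """Probability of x successes in a sample of n drawn without replacement
    from N items, k of which are successes."""
    # x cannot exceed the sample size or the number of successes, and the
    # n - x failures drawn cannot exceed the N - k failures available.
    if x < max(0, n - (N - k)) or x > min(n, k):
        return 0.0
    return comb(k, x) * comb(N - k, n - x) / comb(N, n)


# Hypothetical population: 10 items, 4 successes, sample of 3.
dist = {x: hypergeom_pmf(x, N=10, n=3, k=4) for x in range(4)}
print(dist)
```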

Example: Laptop Computers

A shipment of

laptop computers to a retail outlet contains that are defective. If a school randomly purchases of these computers, find the probability distribution for the number of defectives. Solution:

Letbe the random variable representing the number of defective computers purchased. With total computers, defective computers, and a sample size of , can take values , , or . For

(no defective computers): For

(one defective computer): For

(two defective computers): Therefore, the probability distribution of

is:

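Mean and Variance of Hypergeometric Distribution

Theorem:

The mean and variance of the hypergeometric distribution $h(x; N, n, k)$ are

$$\mu = \frac{nk}{N} \quad \text{and} \quad \sigma^2 = \frac{N - n}{N - 1} \cdot n \cdot \frac{k}{N}\left(1 - \frac{k}{N}\right)$$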
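As a sanity check, the closed-form mean $nk/N$ and variance $\frac{N-n}{N-1} \cdot n \cdot \frac{k}{N}\left(1 - \frac{k}{N}\right)$ of the hypergeometric distribution can be compared with moments computed by direct summation. The sketch below assumes hypothetical values $N = 10$, $n = 3$, $k = 4$; `hypergeom_moments` is an illustrative name.

```python
from math import comb


def hypergeom_moments(N: int, n: int, k: int) -> tuple[float, float]:
    """Return (mean, variance) of a hypergeometric variable by summation."""
    lo, hi = max(0, n - (N - k)), min(n, k)
    pmf = {
        x: comb(k, x) * comb(N - k, n - x) / comb(N, n)
        for x in range(lo, hi + 1)
    }
    mean = sum(x * f for x, f in pmf.items())
    variance = sum((x - mean) ** 2 * f for x, f in pmf.items())
    return mean, variance


N, n, k = 10, 3, 4  # hypothetical values, not from the text
mean, variance = hypergeom_moments(N, n, k)
closed_mean = n * k / N
closed_var = (N - n) / (N - 1) * n * (k / N) * (1 - k / N)
print(mean, closed_mean)
print(variance, closed_var)
```

The factor $(N - n)/(N - 1)$ is what separates this variance from the binomial variance $npq$ with $p = k/N$; it approaches $1$ when the population is much larger than the sample.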

Negative Binomial and Geometric Distributions

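In some experiments, we repeat independent trials (each with probability $p$ of success) until a fixed number of successes, $k$, has occurred. Instead of the probability of $x$ successes in a fixed number of trials, we are interested in the probability that the $k$-th success occurs on the $x$-th trial.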

Example:

Suppose a drug is effective in

of cases. What is the probability that the fifth patient to experience relief is the seventh patient to receive the drug? Solution:

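A possible sequence is $SFSSSFS$, where $S$ denotes relief and $F$ denotes no relief, with probability $p^5 q^2$ for success probability $p$ and $q = 1 - p$.

The number of ways to arrange $4$ successes and $2$ failures in the first $6$ trials is $\binom{6}{4} = 15$.

Thus, $P = \binom{6}{4} p^5 q^2 = 15\, p^5 q^2$.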

Negative Binomial Distribution

Definition:

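Suppose repeated independent trials result in a success with probability $p$ and a failure with probability $q = 1 - p$. The random variable $X$ (the number of trials needed to achieve $k$ successes) then follows the negative binomial distribution. Its probability mass function is:

$$b^*(x; k, p) = \binom{x-1}{k-1} p^k q^{x-k}, \quad x = k, k+1, k+2, \ldots$$

where $p$ is the probability of success, $q = 1 - p$, $k$ is the number of successes, and $x$ is the trial on which the $k$-th success occurs.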
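The negative binomial probability can be computed directly from its definition: the last trial must be a success, and the remaining $k - 1$ successes can fall anywhere among the first $x - 1$ trials. The sketch below uses the illustrative name `neg_binomial_pmf`, and the success probability $p = 0.6$ is an assumed value chosen only to have something to evaluate.

```python
from math import comb


def neg_binomial_pmf(x: int, k: int, p: float) -> float:
    """Probability that the k-th success occurs on trial x."""
    if x < k:
        return 0.0  # k successes need at least k trials
    # comb(x - 1, k - 1) places the first k - 1 successes among the first
    # x - 1 trials; trial x itself is the k-th success.
    return comb(x - 1, k - 1) * p**k * (1 - p) ** (x - k)


# Fifth success on the seventh trial, with an assumed p = 0.6.
prob = neg_binomial_pmf(7, 5, 0.6)
print(prob)
```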

Example:

In an NBA championship, the first team to win

games out of wins the series. Suppose that team has probability of winning a game.

What is the probability that team

will win the series in games? What is the probability that team

will win the series? Solution:

Plugging into the formula:

- Now we need to do the above

times:

Geometric Distribution

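A special case of the negative binomial distribution is when $k = 1$: we count the number of trials needed to obtain the first success. The resulting distribution is called the geometric distribution.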

Definition:

If repeated independent trials can result in a success with probability $p$ and a failure with probability $q = 1 - p$, then the probability distribution of the random variable $X$, the number of the trial on which the first success occurs, is

$$g(x; p) = p q^{x-1}, \quad x = 1, 2, 3, \ldots$$

Example:

- If

in items is defective ( ), the probability that the -th item inspected is the first defective is - If the probability of a successful phone call is

, the probability that attempts are needed is

Theorem:

The mean and variance of a random variable following the geometric distribution are

$$\mu = \frac{1}{p} \quad \text{and} \quad \sigma^2 = \frac{1 - p}{p^2}$$
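The geometric probabilities and their moments are easy to verify numerically. This is a rough sketch, assuming a hypothetical success probability $p = 0.2$ and truncating the infinite sums at a point where the remaining tail is negligible; `geometric_pmf` is an illustrative name.

```python
def geometric_pmf(x: int, p: float) -> float:
    """Probability that the first success occurs on trial x."""
    if x < 1:
        return 0.0
    # x - 1 failures followed by one success.
    return p * (1 - p) ** (x - 1)


p = 0.2  # hypothetical value, not from the text
xs = range(1, 2001)  # the tail beyond 2000 trials is negligible for p = 0.2
mean = sum(x * geometric_pmf(x, p) for x in xs)
variance = sum((x - mean) ** 2 * geometric_pmf(x, p) for x in xs)

# Compare with the closed forms 1/p and (1 - p)/p**2.
print(mean, 1 / p)
print(variance, (1 - p) / p**2)
```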

Poisson Distribution and the Poisson Process

A Poisson experiment involves recording the number of times a certain event (random variable $X$) occurs during a given time interval or in a specified region.

A Poisson experiment is based on the Poisson process, which has these key properties:

- Independence: The number of events in one time interval or region is independent of the number in any other non-overlapping interval or region. This means the process has no memory.

- Proportionality: The probability of a single event occurring in a very short time interval or small region is proportional to the length or size of that interval or region, and does not depend on events outside it.

- Negligible Multiple Events: The chance of more than one event occurring in such a short interval or small region is so small it can be ignored.

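The random variable $X$, the number of events occurring in a Poisson experiment, is called a Poisson random variable, and its probability distribution is called the Poisson distribution.

The average number of events is given by:

$$\mu = \lambda t$$

where:

- $\lambda$ is the average number of events per unit time or region (the rate of occurrence)

- $t$ is the length of the time interval or the size of the region

The probability of observing exactly $x$ events is denoted by $p(x; \lambda t)$.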

Definition:

The probability distribution of the Poisson random variable $X$, representing the number of outcomes occurring in a given time interval or specified region denoted by $t$, is

$$p(x; \lambda t) = \frac{e^{-\lambda t} (\lambda t)^x}{x!}, \quad x = 0, 1, 2, \ldots$$

where $\lambda$ is the average number of outcomes per unit time, distance, area, or volume and $e = 2.71828\ldots$

The Poisson probability sum is defined as:

$$P(r; \lambda t) = \sum_{x=0}^{r} p(x; \lambda t)$$
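Both the Poisson probability and its cumulative sum can be evaluated with the standard library. The following is a minimal sketch; the names `poisson_pmf` and `poisson_cdf` are illustrative, the single parameter `mu` stands for the product $\lambda t$, and the mean of $2$ used at the end is a hypothetical value.

```python
from math import exp, factorial


def poisson_pmf(x: int, mu: float) -> float:
    """Probability of exactly x events when the mean number of events is mu."""
    if x < 0:
        return 0.0
    return exp(-mu) * mu**x / factorial(x)


def poisson_cdf(r: int, mu: float) -> float:
    """Poisson probability sum: probability of at most r events."""
    return sum(poisson_pmf(x, mu) for x in range(r + 1))


# Hypothetical mean of 2 events per interval.
print(poisson_pmf(0, 2.0), poisson_cdf(3, 2.0))
```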

Example: Radioactive Particles

During a laboratory experiment, the average number of radioactive particles passing through a counter in

millisecond is . What is the probability that particles enter the counter in a given millisecond? Solution:

Using the Poisson distribution withand :

Example: Oil Tankers

Ten is the average number of oil tankers arriving each day at a certain port. The facilities at the port can handle at most 15 tankers per day. What is the probability that on a given day tankers have to be turned away?

Solution:

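Let $X$ be the number of tankers arriving each day. Tankers are turned away when more than $15$ arrive, so with $\mu = 10$ we need to find:

$$P(X > 15) = 1 - P(X \le 15) = 1 - \sum_{x=0}^{15} p(x; 10) = 1 - 0.9513 = 0.0487$$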
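The tail probability for the tanker example can be reproduced by summing Poisson probabilities from $0$ to $15$ and taking the complement. This short sketch uses only the two numbers stated in the example (a mean of $10$ arrivals and a capacity of $15$); the variable names are illustrative.

```python
from math import exp, factorial

mu = 10        # average number of tankers arriving per day
capacity = 15  # maximum number of tankers the port can handle per day

# P(X <= capacity): sum of Poisson probabilities for 0..capacity arrivals.
p_within = sum(exp(-mu) * mu**x / factorial(x) for x in range(capacity + 1))

# Tankers are turned away only when arrivals exceed the capacity.
p_turned_away = 1 - p_within
print(round(p_turned_away, 4))
```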

Theorem:

Both the mean and the variance of the Poisson distribution $p(x; \lambda t)$ are $\lambda t$.

Nature of the Poisson Probability Function

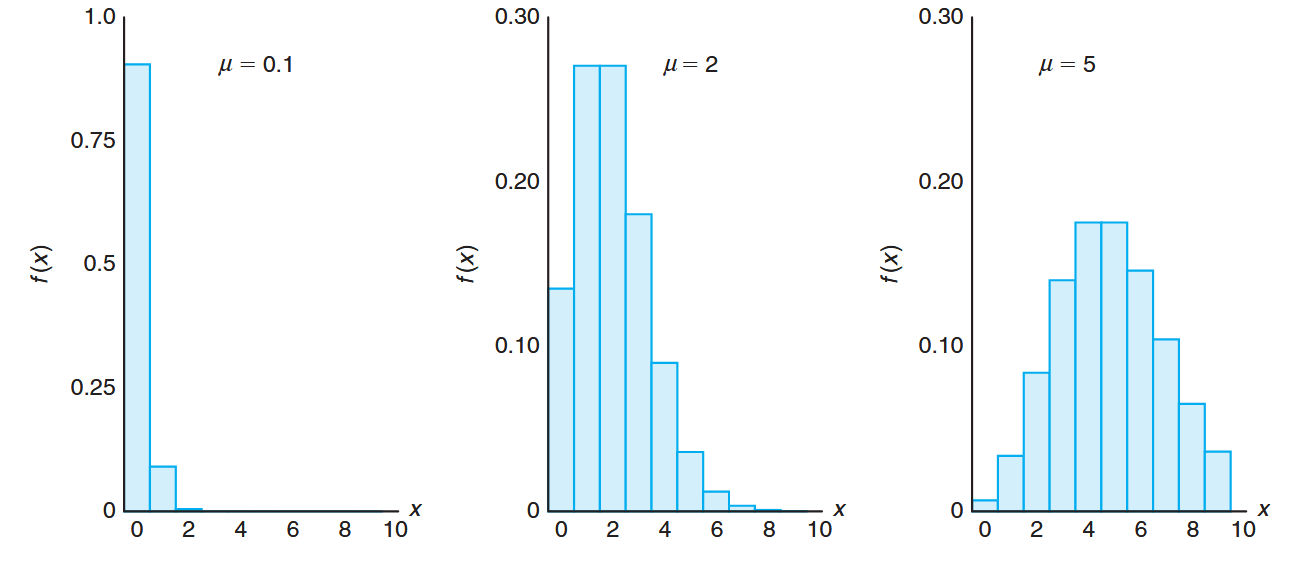

Like many discrete and continuous distributions, the form of the Poisson distribution becomes increasingly symmetric and bell-shaped as the mean grows larger. The probability function for different values of

- When

- When

- When

This behavior parallels what we see in the binomial distribution as well.

Poisson density functions for different means. (Walpole et al., 2017).

Approximation of Binomial Distribution by a Poisson Distribution

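The Poisson distribution can be viewed as a limiting form of the binomial distribution. When the sample size $n$ is large and the success probability $p$ is small, the Poisson distribution with $\mu = np$ can be used to approximate binomial probabilities.

The independence among Bernoulli trials in the binomial case aligns with the independence property of the Poisson process. When $p$ is close to $0$, a success in any single trial is rare, which parallels the negligible chance of multiple events in a very short interval.

If $p$ is close to $1$, the approximation can still be used by interchanging the roles of success and failure, which changes $p$ to a value close to $0$.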

Theorem:

Let $X$ be a binomial random variable with probability distribution $b(x; n, p)$. When $n \to \infty$, $p \to 0$, and $np \to \mu$ remains constant,

$$b(x; n, p) \to p(x; \mu)$$
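The quality of the approximation can be inspected by computing both distributions side by side. The sketch below assumes hypothetical values $n = 2000$ and $p = 0.001$ (so $\mu = np = 2$); the helper names `binom` and `poisson` are illustrative.

```python
from math import comb, exp, factorial

n, p = 2000, 0.001  # hypothetical values: large n, small p
mu = n * p          # mean of the approximating Poisson distribution


def binom(x: int) -> float:
    """Exact binomial probability b(x; n, p)."""
    return comb(n, x) * p**x * (1 - p) ** (n - x)


def poisson(x: int) -> float:
    """Approximating Poisson probability p(x; mu)."""
    return exp(-mu) * mu**x / factorial(x)


for x in range(6):
    print(x, round(binom(x), 5), round(poisson(x), 5))

# Largest pointwise difference over the first few values of x.
max_gap = max(abs(binom(x) - poisson(x)) for x in range(11))
print(max_gap)
```

Increasing `n` while holding `mu` fixed should shrink `max_gap`, which is the limiting behavior the theorem describes.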

Example: Industrial Accidents

In a certain industrial facility, accidents occur infrequently. The probability of an accident on any given day is

, and accidents are independent of each other.

- What is the probability that in any given period of

days there will be an accident on exactly one day? - What is the probability that there are at most three days with an accident?

Solution:

Letbe a binomial random variable with and . Thus, . Using the Poisson approximation with :

Example: Glass Manufacturing

In a manufacturing process where glass products are made, defects or bubbles occur occasionally, rendering the piece unmarketable. On average,

in every items produced has one or more bubbles. What is the probability that a random sample of will yield fewer than items possessing bubbles? Solution:

This is essentially a binomial experiment withand . Since is very close to and is quite large, we can approximate with the Poisson distribution using: Hence, if

represents the number of bubbles:

Exercises

Question 1

A ship is shooting rockets at an enemy ship. The probability of success is

Part a

What is the probability that the first successful hit is on the fifth shot?

Solution:

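This is a classic case of a geometric distribution, where $X$ is the number of the shot on which the first hit occurs and $p$ is the probability of success:

$$P(X = 5) = (1 - p)^4\, p$$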

Part b

How many rockets should we shoot so that the probability of a successful hit is at least

Solution:

We can use both a geometric distribution and a binomial distribution to solve this problem.

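Assuming the number of rockets shot is $n$, the probability that all $n$ shots miss is $(1 - p)^n$.

Therefore, the probability of getting at least one successful hit after $n$ shots is $1 - (1 - p)^n$.

This is a specific case of the more general CDF for geometric distributions:

$$P(X \le n) = 1 - (1 - p)^n$$

This formula works because the first hit occurs within the first $n$ shots unless all $n$ shots miss.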

We want to know when

Therefore, the ship must take a minimum of

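We can also solve the problem using a binomial distribution. Here, we model the total number of successes in $n$ shots as a binomial random variable $Y$ and require at least one success:

$$P(Y \ge 1) = 1 - P(Y = 0) = 1 - (1 - p)^n$$

We get the exact same inequality as before.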
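The smallest number of shots that meets a required probability of at least one hit can also be found by a direct search over $n$ using $1 - (1 - p)^n$. The sketch below is not the exercise's own solution: the function name `min_trials` and the values $p = 0.3$ and target $0.95$ are hypothetical and chosen only to demonstrate the calculation.

```python
def min_trials(p: float, target: float) -> int:
    """Smallest n such that 1 - (1 - p)**n >= target."""
    if not 0 < p <= 1:
        raise ValueError("p must be in (0, 1]")
    if not 0 <= target < 1:
        raise ValueError("target must be in [0, 1)")
    n = 1
    # Increase n until the probability of at least one success is large enough.
    while 1 - (1 - p) ** n < target:
        n += 1
    return n


# Hypothetical inputs: success probability 0.3, required probability 0.95.
n_needed = min_trials(0.3, 0.95)
print(n_needed, 1 - (1 - 0.3) ** n_needed)
```

A loop is used instead of the closed form $\lceil \ln(1 - \text{target}) / \ln(1 - p) \rceil$ to avoid rounding surprises when the ratio lands very close to an integer.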

Part c

Given the first

Solution:

Denoting

Which is like saying

Therefore, all we need to calculate is:

Question 2

In a coffee shop there are

What is the probability that at most

Solution:

Denoting:

Using a hypergeometric distribution, we want to find: